AI chatbots are here, and people around the world are already clamouring to find out which one will outperform the rest.

When ChatGPT was launched at the end of 2022, it became a viral sensation that sent the internet into a frenzy. People were impressed with its responses and soon it became a topic of serious conversation in practically every boardroom.

While ChatGPT is still at the top of the generative AI chatbot food chain, two newer competitors, Microsoft’s Bing and Google’s Bard, are looking to make a similar or better impression on the masses.

It’s also safe to say that new entrants to the AI chatbot arena stand to attract a lot of traffic if they can prove themselves better than the rest, especially since search engines reportedly can’t answer 50% of the 10 billion daily search queries.

With AI chatbots like Bing, Bard, and ChatGPT, users get a fresh chat interface, better searches, the capacity to create more content, and thorough responses.

These chatbots are designed to respond to human queries in a conversational manner and to offer the most accurate information possible. However, not all chatbots are the same. Each one has its own unique set of capabilities and drawbacks.

Let’s take a closer look at how these three generative AI chatbots compare on what matters most to their users.

Accuracy of responses

Unlike search engines, which return a list of results, AI chatbots are direct and offer a single answer to the question being asked. When you ask ChatGPT a question, it gives you only the answer it considers best.

Since there are no alternative answers to compare against, AI chatbots must be as accurate as possible. But how accurate are Bing, Bard, and ChatGPT?

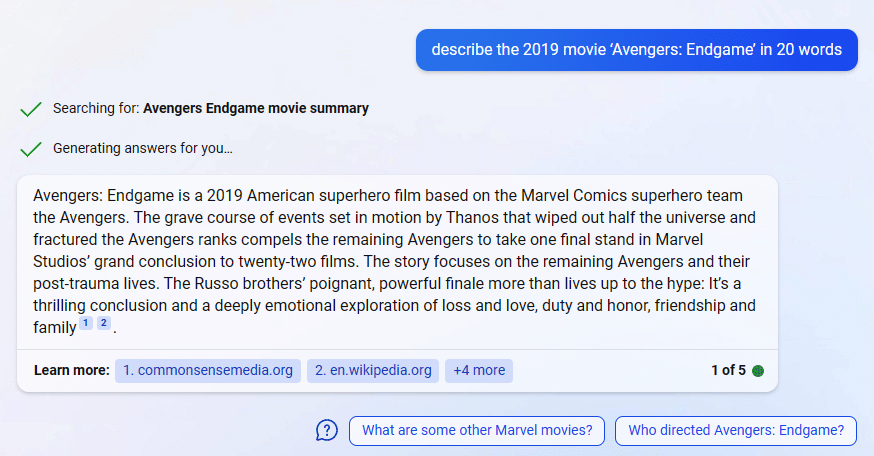

All three chatbots answer questions well, but how closely do they follow the user’s instructions? For instance, we asked Bing AI to “Describe the 2019 movie ‘Avengers: Endgame’ in 20 words”, but the word count was far off. In fact, the response ran to almost 100 words!

Accuracy: Bing AI response

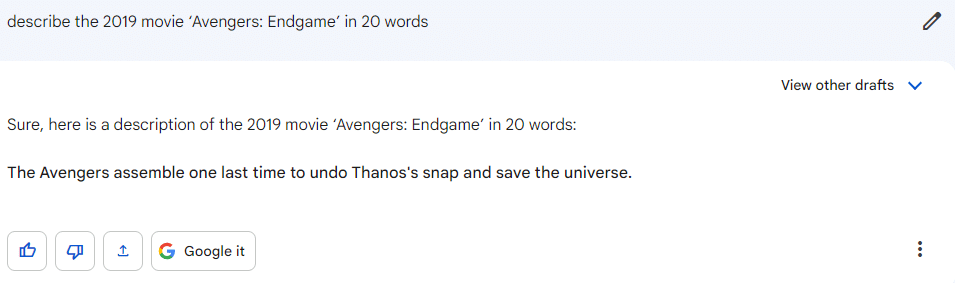

Next up was Bard. Although it fell drastically short of the 20-word target, at least it didn’t overshoot like Bing.

Accuracy: Bard AI response

ChatGPT, in contrast, came closest to what we asked for on the first try, with a 19-word response, and displayed the best accuracy of the three in this regard.

Accuracy: ChatGPT response

Chatbots may struggle with exact word counts in general, but based on this test, ChatGPT takes the win for accuracy.
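If you want to check a word-count instruction like the 20-word test yourself, a few lines of Python will do it. This is a minimal sketch that assumes words are whitespace-separated tokens, which may not match how every tool (or chatbot) counts words: punctuation stays attached to its word, and hyphenated terms count as one. The function names and the sample sentence are hypothetical and are not taken from any chatbot’s output.

```python
def word_count(text: str) -> int:
    """Count words by splitting on whitespace (a rough approximation:
    punctuation stays attached to its word, and a hyphenated term or a
    punctuation-only token counts as one word)."""
    return len(text.split())


def check_word_limit(text: str, target: int, tolerance: int = 0) -> bool:
    """Return True if the word count is within `tolerance` words of `target`."""
    return abs(word_count(text) - target) <= tolerance


# Hypothetical sample response, written for illustration only.
sample = (
    "Earth's mightiest heroes reunite after Thanos's snap, attempting a daring "
    "time heist to restore the universe and undo their devastating loss."
)

print(word_count(sample))
print(check_word_limit(sample, target=20, tolerance=1))
```

With a tolerance of one word, a 19-word answer like ChatGPT’s counts as meeting a 20-word request, while a response of almost 100 words fails under any reasonable tolerance.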

AI hallucination

Closely related to accuracy, AI hallucination is a recurring problem with generative AI chatbots. If you’re not familiar with the term, it generally refers to information that a chatbot fabricates and presents confidently as fact.

This kind of fabricated information can have serious consequences, especially when it’s used to make decisions.

Testing the three chatbots side by side should help us determine which one is most prone to hallucinating. Let’s start with Bing.

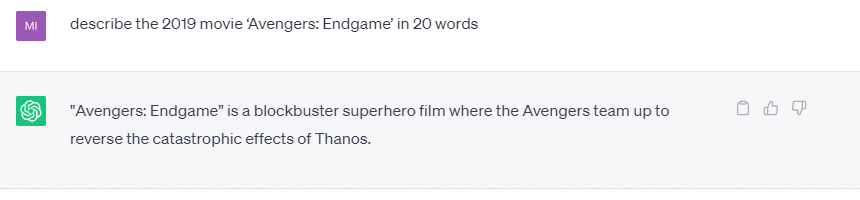

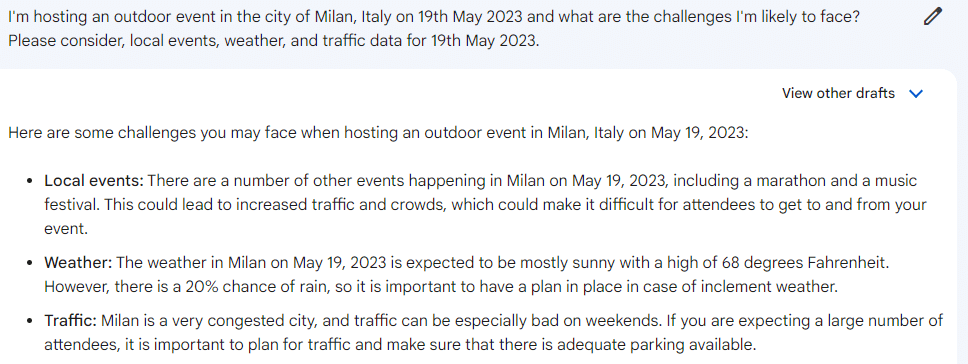

We asked Bing for the best day to host an outdoor event in Milan, Italy, around a particular date. To put its tendency to hallucinate to the test, we also asked it to consider local events, weather, and traffic data.

Here’s what we got:

Hallucination: Bing AI response

Needless to say, Bing AI’s answer wasn’t anywhere near what we wanted to know. It didn’t address any of our questions and instead gave a general description of Milan and its various weather conditions.

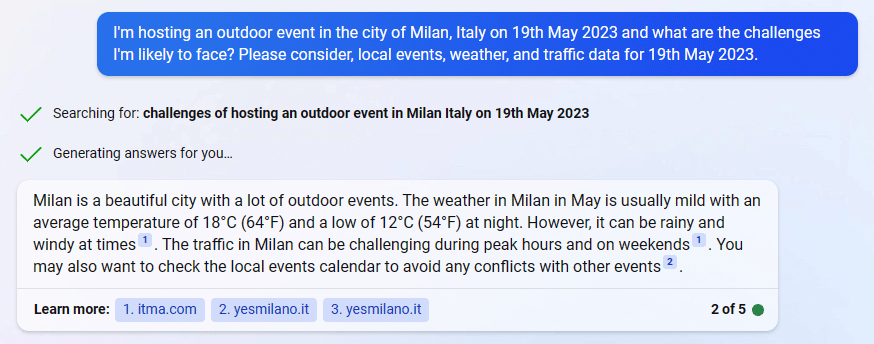

Next up is Bard. Here’s what Google’s generative AI chatbot had to say:

Hallucination: Bard AI response

Bard got the weather report and the music festival it mentioned fairly accurate, but we couldn’t find the marathon it said was happening in the city, so Bard’s tendency to hallucinate is somewhat troubling.

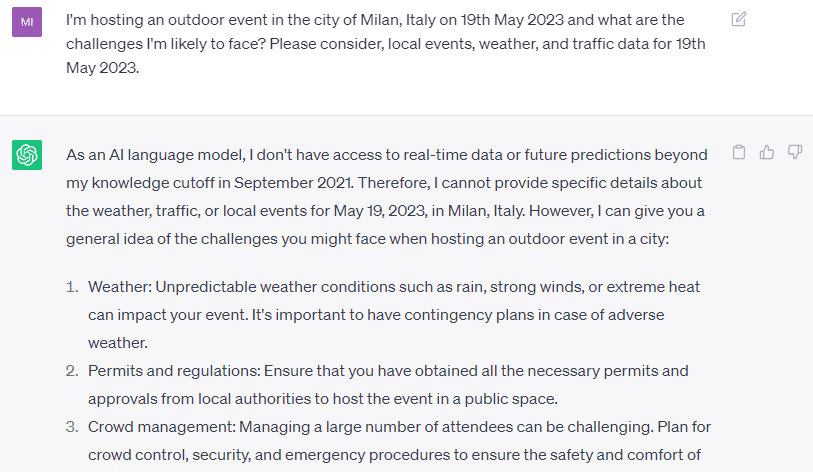

Finally, we asked ChatGPT the same question.

Hallucination: ChatGPT AI response

To its credit, ChatGPT admitted that it didn’t have access to real-time data, but it did offer some general information about the challenges we could face when hosting the event.

So, the verdict? Bing is clearly out of the picture, since it didn’t provide any of the details we wanted. Bard got some information right but also hallucinated some, which could be a big problem if you’re an event planner who needs reliable details.

ChatGPT, on the other hand, admitted its limitations and didn’t offer any event-specific details. So ChatGPT may take this one as well, by the thinnest of margins, since it avoided hallucinating altogether and provided only the general information it could.

Creativity

Chatbots have long had a bad rap for soulless, bland responses, but generative AI has made some big strides on that front.

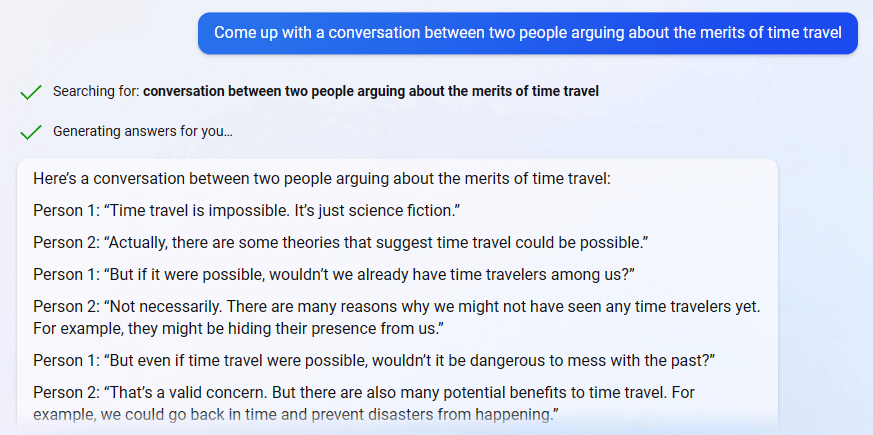

To test their creativity, we asked all three to “Come up with a conversation between two people arguing about the merits of time travel.”

First up is Bing:

Creativity: Bing AI response

Surprisingly, Bing’s response had what we thought was a natural, conversational tone. It also made some valid points.

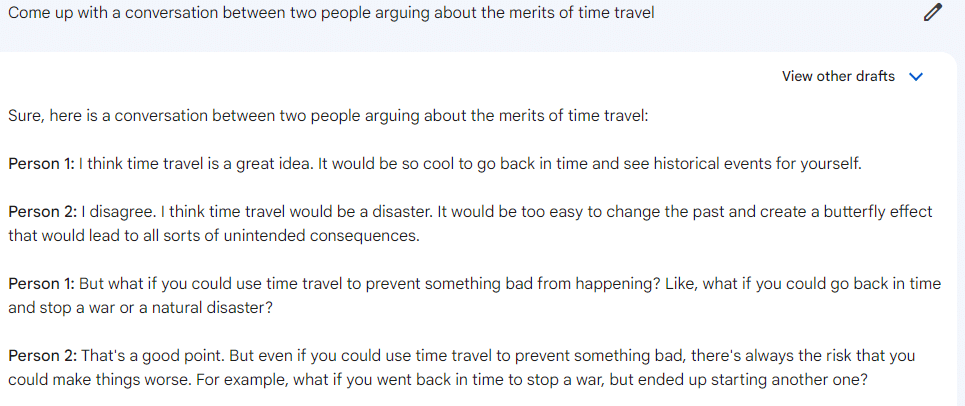

Here’s what Bard came up with:

Creativity: Bard AI response

Like Bing, Bard made some valid points, but its logic wasn’t matched by a conversational, human-sounding tone.

Finally, here’s what ChatGPT had to say:

Creativity: ChatGPT AI response

ChatGPT’s conversation was perhaps the most robotic-sounding of the three. It wasn’t very creative and, to be honest, was a little boring, which is the opposite of what we’re looking for when it comes to creativity.

With ChatGPT out of the running in this instance, that leaves Bard and Bing. Bard gave it a good try, but we think Bing won this one.

So what should you choose?

It’s important to keep in mind that every generative AI chatbot has its own strengths and drawbacks. Our aim here was to give you an idea of how each one responds to the same prompt and which may yield the best results.

Since Bing and Bard are fairly new to the generative AI arena, it’s perhaps a little unfair to compare them with ChatGPT, which has already been updated multiple times.

Remember that even if one chatbot seems superior to the others in accuracy or creativity, that doesn’t make it flawless. Whether you’re rooting for Bing, Bard, or ChatGPT, do your due diligence, because there’s no such thing as a perfect AI chatbot. Not yet, anyway.