Google’s enthusiasm for AI was on full display at its annual I/O conference on 10 May 2023, when Sundar Pichai revealed the tech giant’s latest venture: bringing generative AI to Google Search.

Google is on a mission to catch up with Microsoft, which launched its GPT-powered Bing earlier this year to challenge Google’s dominance in search. Since then, Bing has been able to claw back some market share. Google is calling its latest innovation in search “AI Snapshots”.

Unfortunately, users will have to wait to try out the improved AI search engine, as Google is planning a staggered release of the new feature. For now, only US users can join a waitlist on Search Labs to try out AI Snapshots.

What’s Google AI Snapshot?

In simple terms, it’s designed to provide users with fast and accurate answers to complex questions that would traditionally require the user to conduct multiple Google searches over several hours.

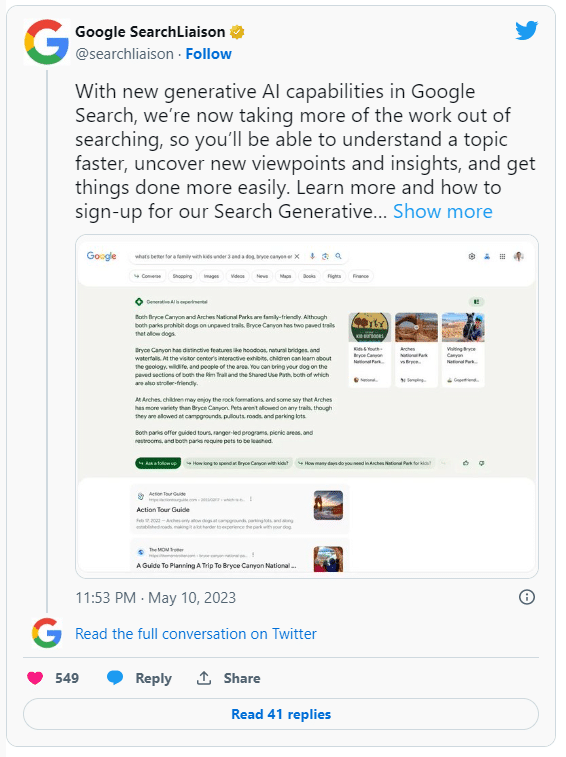

Here’s how you’ll be able to view the new search engine once it’s available to users:

Source: Google SearchLiaison’s X post

Directly under the snapshot, users will see suggested next steps and can ask follow-up questions. When you click on these, you’ll be taken to a conversational mode where you can ask Google for additional information about the topics you’re exploring.

One of the most promising features is that context from one question will be carried over to the next, so you can explore a topic in a more natural way. It will even give you a range of perspectives that you can dive into for further details.

So let’s go through the main features that Google’s generative AI offers and we’ll also give you our opinion about what this could all mean for you.

Shop with Google’s generative AI

According to Google, the shopping experience is about to get a whole lot faster and easier. That’s great news: over 2.6 billion digital shoppers are scouring the internet for the best offers, and if Google’s generative AI can improve the experience for them, that’s a slam dunk!

When users search for a product, they get a quick view of the important factors to consider and the products that fit what they’re looking for. You’ll have access to product descriptions that include up-to-date reviews, prices, ratings, and even product images.

So how’s this possible? The generative AI is built on Google’s Shopping Graph, which includes more than 35 billion product listings. It is arguably the world’s most comprehensive product dataset, and it gives people reliable, up-to-date results.

Hear from different sources and get different perspectives

Let’s face it. We’d rather hear about someone’s experience with a product or service before we make our own purchasing decisions. Knowing this, Google has designed experiences that help users find the right content on the web and make it a cinch for people to dive deep into what they’re looking for.

Google also hasn’t overlooked the importance of ads, which help users find the products and services they’re searching for. One of the most frequently asked questions is whether ads will appear in Google’s new search results, and the answer is a clear and resounding ‘Yes!’

These ads will continue to appear in dedicated slots and the tech giant will continue to make sure that search ads can be distinguished from organic search results.

Google’s responsible approach to generative AI

While Google has made it abundantly clear that it wants to keep advancing in AI, it isn’t ignoring the technology’s potential limitations. It’s taking a slow and responsible approach, focusing on upholding the high quality of its search results while continuing to make improvements.

Building on the improvements it has made to its systems over the years, Google is adding more guardrails, such as limiting the types of questions where these generative AI capabilities will appear.

If users want to evaluate the information, they can expand their view and get deeper insight into how the response was developed, which makes the process more transparent.

What have we learned about Google Snapshot so far?

Through its Search Generative Experience (SGE) testing, Google has found that people like to learn more about the topics they’re interested in by asking follow-up questions. Owing to this feedback, Google is experimenting with new and simpler ways to ask questions directly from search results pages.

To do this in Google Snapshot, users just have to enter their follow-up questions in the “ask a follow-up” fields that can be found throughout the Google search results page. Check out the image below to see how it appears.

Source: Medium.com

Generative AI will also be used to make translation more seamless and accurate. That matters because words can have more than one meaning, which creates ambiguity and can lead to an inaccurate translation.

With generative AI, Google AI search users no longer have to guess what translations mean. If a word has more than one meaning, it will be underlined so that users can click on the word and select the specific meaning they want it to convey, similar to what’s shown below.

Source: Google

While the feature isn’t ready for users to test out just yet, it will be coming to the US for English-to-Spanish translations, and more countries and languages will be added in the future.

What we think Google’s new AI Snapshot means for you

We don’t want to get ahead of ourselves, especially since AI Snapshot has yet to be used by real users. All signs point to Google still refining the AI to make it as close to perfect as possible before opening it to US users for trial runs, so it’s safe to say that the search engine giant is committed to making its contribution to AI top quality.

Based on the information available, however, AI Snapshot promises to be a step in the right direction as far as users are concerned. And with more in-depth, transparent features, users can understand how their results are generated, which lends more legitimacy to the generative AI.

Our first impression based on everything we know is that Google’s AI Snapshot is going to be a formidable adversary for other players in the generative AI space since Google is using its impressive datasets to give users an experience unlike any other.

Having said that, it’s wise to keep an open mind until we have the chance to experience the new generative AI search engine for ourselves and see whether it actually helps users find what they’re looking for faster and more easily, as promised, or whether it leaves much to be desired.

How you can get started with AI

Google Snapshot may seem a bit alien if you’re not already using AI, and it may leave you with more questions than answers. So let’s take a quick look at how you can put on your AI hat and make it a part of your business.

If you’re working with UX designers, for instance, it’s fair to assume that their role will change significantly with the rise of generative AI. UX designers used to focus on designing interfaces that made it easier for users to navigate your content, but with the dawn of generative AI, the role has expanded to include designing interfaces that promote interaction with ‘smart’ content.

Just as with AR/VR design, web3, or any other technology that’s making a splash, your UX team will need to build hands-on experience with AI products. Here are two ways your UX designers can do that.

Acquire foundational knowledge in AI: The first thing you need to do is understand the basics of machine learning, neural networks, and deep learning. Trying to understand new tools like Google AI Snapshot can be tough if you don’t have the building blocks to put it all together.

Whether it’s through YouTube videos, podcasts, or articles put together by industry leaders, take the time to learn about AI and where the industry is headed, because not paying attention could cost you a lot more than you think in the long run.

Design interfaces that enable interaction with AI-generated content: The world of AI can be cutthroat as everyone tries to become a market leader and gain a first-mover advantage. Gaining exposure and building real UX skill sets can be what sets you apart from the rest.

There are many AI tools out there that are freely available to get you started. The key is to get real hands-on experience with a strong AI-driven product. Once you have the foundational knowledge you need, it becomes easier to connect the dots and design interfaces that give users the ability to interact with AI-generated content and enhance their experience.

While AI may not be everything that the future has to offer, it is important not to be intimidated by it. Embrace its potential, and don’t be afraid to create innovative, engaging, and user-friendly interfaces that make the most of it.